Title here

Summary here

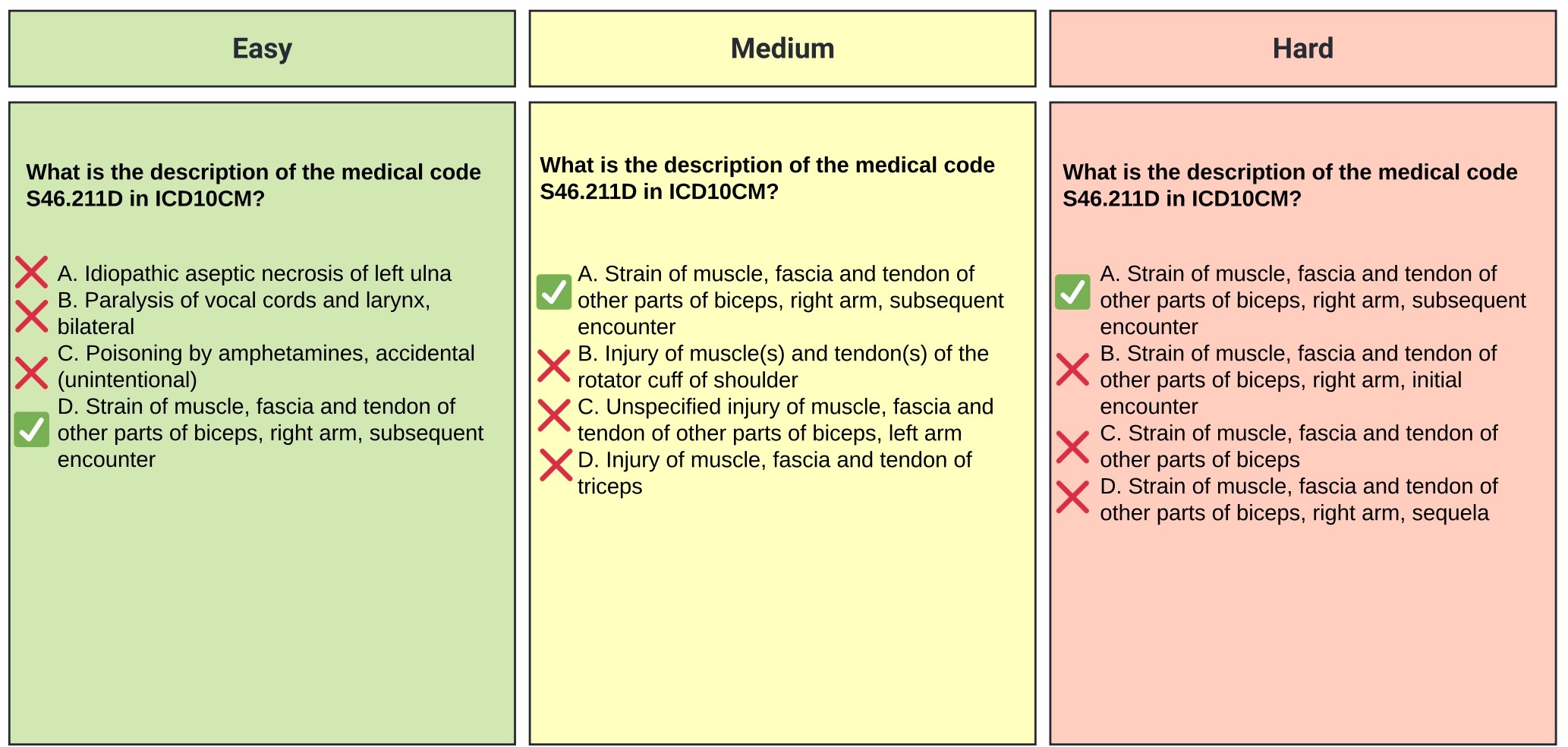

A Medical Concepts QA Dataset for LLM Evaluation

We evaluated different models on MedConceptsQA benchmark using our evaluation code that is available here. If you wish to submit your model for evaluation, please open a GitHub issue with your model's HuggingFace name here.

| Model Name | Accuracy | CI |

|---|---|---|

| gpt-4-0125-preview | 52.489 | 2.064 |

| meta-llama/Meta-Llama-3.1-70B-Instruct | 48.471 | 2.065 |

| m42-health/Llama3-Med42-70B | 47.093 | 2.062 |

| meta-llama/Meta-Llama-3-70B-Instruct | 47.076 | 2.062 |

| aaditya/Llama3-OpenBioLLM-70B | 41.849 | 2.039 |

| HPAI-BSC/Llama3.1-Aloe-Beta-8B | 38.462 | 2.010 |

| gpt-3.5-turbo | 37.058 | 1.996 |

| meta-llama/Meta-Llama-3-8B-Instruct | 34.8 | 1.968 |

| aaditya/Llama3-OpenBioLLM-8B | 29.431 | 1.883 |

| johnsnowlabs/JSL-MedMNX-7B | 28.649 | 1.868 |

| epfl-llm/meditron-70b | 28.133 | 1.858 |

| dmis-lab/meerkat-7b-v1.0 | 27.982 | 1.855 |

| BioMistral/BioMistral-7B-DARE | 26.836 | 1.831 |

| epfl-llm/meditron-7b | 26.107 | 1.814 |

| HPAI-BSC/Llama3.1-Aloe-Beta-70B | 25.929 | 1.811 |

| dmis-lab/biobert-v1.1 | 25.636 | 1.804 |

| UFNLP/gatortron-large | 25.298 | 1.796 |

| PharMolix/BioMedGPT-LM-7B | 24.924 | 1.787 |

| Model Name | Accuracy | CI |

|---|---|---|

| gpt-4-0125-preview | 61.911 | 3.475 |

| meta-llama/Meta-Llama-3.1-70B-Instruct | 58.720 | 3.523 |

| HPAI-BSC/Llama3.1-Aloe-Beta-70B | 58.142 | 3.530 |

| meta-llama/Meta-Llama-3-70B-Instruct | 57.867 | 3.534 |

| m42-health/Llama3-Med42-70B | 56.551 | 3.547 |

| aaditya/Llama3-OpenBioLLM-70B | 53.387 | 3.570 |

| HPAI-BSC/Llama3.1-Aloe-Beta-8B | 41.671 | 3.528 |

| gpt-3.5-turbo | 41.476 | 3.526 |

| meta-llama/Meta-Llama-3-8B-Instruct | 40.693 | 3.516 |

| aaditya/Llama3-OpenBioLLM-8B | 35.316 | 3.421 |

| epfl-llm/meditron-70b | 34.809 | 3.409 |

| johnsnowlabs/JSL-MedMNX-7B | 32.436 | 3.350 |

| BioMistral/BioMistral-7B-DARE | 28.702 | 3.237 |

| PharMolix/BioMedGPT-LM-7B | 28.204 | 3.220 |

| dmis-lab/meerkat-7b-v1.0 | 28.187 | 3.219 |

| epfl-llm/meditron-7b | 26.231 | 3.148 |

| dmis-lab/biobert-v1.1 | 25.982 | 3.138 |

| UFNLP/gatortron-large | 25.093 | 3.102 |

@article{SHOHAM2024109089,

title = {MedConceptsQA: Open source medical concepts QA benchmark},

journal = {Computers in Biology and Medicine},

volume = {182},

pages = {109089},

year = {2024},

issn = {0010-4825},

doi = {https://doi.org/10.1016/j.compbiomed.2024.109089},

url = {https://www.sciencedirect.com/science/article/pii/S0010482524011740},

author = {Ofir Ben Shoham and Nadav Rappoport}

}